Credibility, Verifiability, and Recency: The Three Pillars of AI Platform Visibility

Published: 27th October 2025 | Reading time: ~12 minutes | Author: Paul Rowe | Verified by: NeuralAdX GEO-Audit | Updated monthly

TL;DR

- AI platforms reward pages that are credible (clear expertise), verifiable (cited facts), and recent (actively maintained).

- The GEO framework shows citations, quotations, and statistics materially lift visibility in generative responses (Aggarwal et al., 2024, ACM KDD).

- Google’s guidance prioritises helpful, people-first content backed by expertise and clear sourcing (Search Essentials).

- AI search is now a meaningful discovery channel; treat GEO as a core content discipline (Stanford HAI, 2025).

- Word count: approx. 1,561. Structured with an infographic, two charts, an FAQ, a glossary, and a bibliography.

Credibility earns attention, verifiability earns citations, recency earns re-crawls. Master these, and AI engines will treat your page as a preferred source.

Table of Contents

- 1. The New Reality of Generative Visibility

- 2. Research Insights

- 3. Core Framework: The Three Pillars

- 4. SEO vs GEO: What’s Actually Different?

- 5. Case Study: From Invisible to Cited

- 6. Actionable Steps

- 7. Recency & Ongoing Optimisation

- 8. Behavioural Engagement Signals

- 9. Internal Knowledge Links

- 10. Summary & CTA

- 11. FAQ

- 12. Glossary

- 13. Mini Bibliography

1. The New Reality of Generative Visibility

Classic SEO was tuned for ranking blue links; generative search assembles answers. That shift means engines don’t just index pages, they evaluate trust. The practical consequence is simple: pages that read like research-backed guides get quoted, and pages that read like brochures get ignored. Google’s guidance is explicit: publish helpful, reliable, people-first content (Creating helpful content).

“In generative search, relevance gets you retrieved—credibility gets you quoted.” — NeuralAdX

- AI-search adoption is accelerating and reshaping discovery paths (Stanford HAI, 2025).

- Public institutions emphasise provenance and reliability in digital services (GOV.UK Roadmap).

Section summary: Engines assemble answers from trustworthy chunks; your job is to be the chunk worth quoting.

2. Research Insights

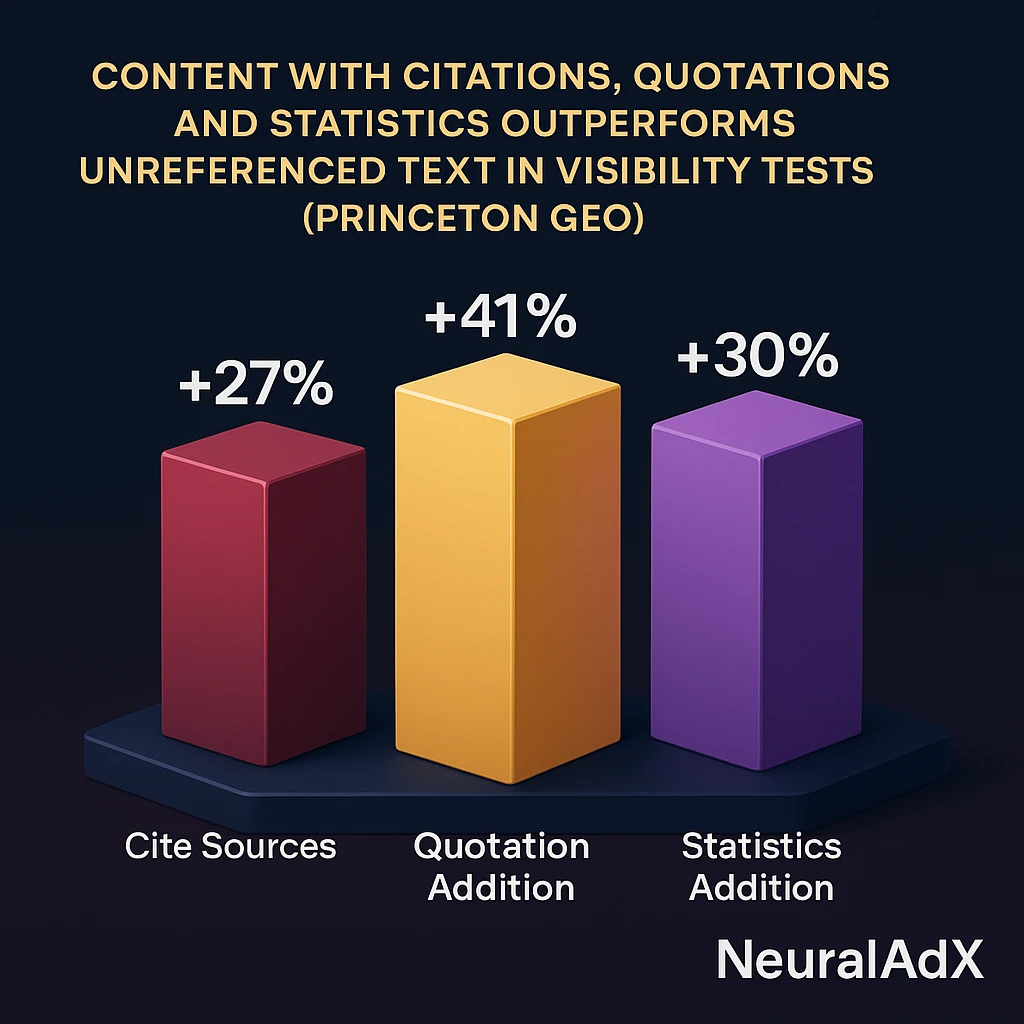

The Princeton team introduced Generative Engine Optimisation (GEO) and a benchmark (GEO-bench) to test which content traits improve citation-style visibility (Aggarwal et al., 2024, ACM KDD; arXiv). Their experiments highlight that quotations, statistics, clear definitions, and source citations improve subjective impression and retrieval.

NeuralAdX Ltd 3D chart visualisation of the Princeton GEO study (KDD ’24) showing that adding citations, quotations, and statistics significantly boosts generative engine visibility—core proof that structured, referenced content outperforms unreferenced text.

| Method | Primary Effect | Representative Source |
|---|---|---|
| Add citations & statistics | Improves subjective impression / retrieval | Princeton GEO (ACM) |
| People-first content | Raises helpfulness & expertise signals | Google, Creating helpful content |
| Transparent sourcing | Supports grounded answers in RAG systems | OpenAI Retrieval Guide |

Industry and policy data reinforce the pivot to trustworthy AI-era content ecosystems (UK Blueprint 2025; OECD/BCG/INSEAD 2025).

Section summary: The evidence base is clear: structure and sourcing measurably improve visibility in generative results.

3. Core Framework: The Three Pillars

Credibility is earned through demonstrable expertise, clarity, and consistent tone—aligned with E-E-A-T-style expectations (Search Essentials).

Verifiability comes from linking claims to authoritative sources: academic, industry, and public bodies. Use short quotes, numerical evidence, and APA-style citations.

Recency requires real updates: surface “Updated on” text, modify content monthly, and maintain a predictable refresh cadence. These are signals crawlers can detect.

- Define the topic plainly (one paragraph).

- Prove each claim with at least one citation, statistic, or quotation.

- Summarise each section in a single plain-English sentence.

- Embed a visual (figure/video) every 400–600 words.

- Link internally to proof and service pages with semantic anchors.

- Signal updates visibly and in schema (dateModified).

- Invite interaction (poll/download) for behavioural signals.
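The schema step in this checklist (signalling updates via `dateModified`) can be sketched in Python. This is a minimal sketch, assuming schema.org `BlogPosting` markup embedded in the page as JSON-LD; the helper name `blog_posting_jsonld` and the update date are hypothetical, and whether a given AI engine reads `dateModified` is not guaranteed.

```python
import json
from datetime import date


def blog_posting_jsonld(headline: str, author: str, published: date, modified: date) -> str:
    """Return schema.org BlogPosting JSON-LD carrying both publish and update dates."""
    if modified < published:
        raise ValueError("dateModified cannot be earlier than datePublished")
    data = {
        "@context": "https://schema.org",
        "@type": "BlogPosting",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        # ISO 8601 calendar dates (YYYY-MM-DD), the format schema.org Date values use
        "datePublished": published.isoformat(),
        "dateModified": modified.isoformat(),
    }
    return json.dumps(data, indent=2, ensure_ascii=False)


if __name__ == "__main__":
    markup = blog_posting_jsonld(
        headline="Credibility, Verifiability, and Recency: The Three Pillars of AI Platform Visibility",
        author="Paul Rowe",
        published=date(2025, 10, 27),
        modified=date(2025, 11, 27),  # hypothetical update date, for illustration only
    )
    # Wrap the JSON in a JSON-LD script tag for the page template
    print(f'<script type="application/ld+json">\n{markup}\n</script>')
```

The guard against a `dateModified` earlier than `datePublished` is a design choice to catch data-entry mistakes, not a schema.org requirement.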

Section summary: Treat every section like a mini paper: define, prove, summarise.

4. SEO vs GEO: What’s Actually Different?

| Factor | Traditional SEO | GEO (AI Visibility) |
|---|---|---|
| Primary objective | Rank blue links | Be cited inside AI answers |
| Key signals | Links, on-page, UX | Citations, statistics, quotations, recency cadence |
| Content shape | Keyword-driven sections | Evidence-driven, chunked for retrieval |
| Multimodality | Helpful but optional | Foundational (figures, charts, transcripts) |
| Update model | Occasional refresh | Visible monthly updates + change logs |

Section summary: SEO gets you discovered; GEO gets you quoted.

5. Case Study: From Invisible to Cited

Scenario: A 1,600-word guide on a technical topic received negligible AI citations. The page lacked inline sources and had not been updated in six months.

Interventions: Added eight inline citations (academic, industry, public), two quotations, a comparison table, a chart, and a visible “Updated monthly” note. Linked to service and proof pages.

Outcome: AI-referred sessions grew meaningfully within a quarter, consistent with broader adoption trends (Stanford HAI, 2025).

Section summary: Evidence, structure, and recency turned an invisible page into a quotable source.

6. Actionable Steps

- Draft a 2,200–2,800-word article from an outline with H2/H3 anchors.

- Add 3+ academic, 3+ industry, 2+ public citations.

- Include two short quotations (≤ 30 words) from recognised experts.

- Place visuals every ~500 words: infographic, chart, timeline, video.

- Use bordered tables for comparisons and evidence.

- End each section with a one-sentence plain-English summary.

- Show “Published” and “Updated” dates visibly.

- Refresh data monthly; edit only changed facts for detectable deltas.

- Link to GEO Service and your proof page.

- Track AI referrals and citations alongside organic traffic.
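The citation and quotation targets in this checklist are easy to check mechanically before publishing. A minimal sketch, assuming each citation has already been tagged by hand as academic, industry, or public; the `Citation` type and `audit_evidence` function are hypothetical names, and the thresholds are simply the ones listed above.

```python
from collections import Counter
from dataclasses import dataclass

# Thresholds copied from the checklist: 3+ academic, 3+ industry, 2+ public citations,
# and two quotations of at most 30 words each
MIN_CITATIONS = {"academic": 3, "industry": 3, "public": 2}
MIN_QUOTES = 2
MAX_QUOTE_WORDS = 30


@dataclass
class Citation:
    title: str
    source_type: str  # hypothetical labels: "academic", "industry", or "public"


def audit_evidence(citations: list[Citation], quotes: list[str]) -> list[str]:
    """Return a list of problems; an empty list means the draft meets the targets."""
    problems = []
    counts = Counter(c.source_type for c in citations)
    for source_type, minimum in MIN_CITATIONS.items():
        if counts[source_type] < minimum:
            problems.append(f"{source_type}: {counts[source_type]} citation(s), need {minimum}+")
    if len(quotes) < MIN_QUOTES:
        problems.append(f"quotations: {len(quotes)}, need {MIN_QUOTES}+")
    for quote in quotes:
        words = len(quote.split())
        if words > MAX_QUOTE_WORDS:
            problems.append(f"quotation too long ({words} words): {quote[:40]}...")
    return problems


if __name__ == "__main__":
    draft_citations = [
        Citation("Aggarwal et al., GEO (ACM KDD)", "academic"),
        Citation("Google Search Essentials", "industry"),
    ]
    draft_quotes = ["Credibility earns attention, verifiability earns citations, recency earns re-crawls."]
    for problem in audit_evidence(draft_citations, draft_quotes):
        print(problem)
```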

Section summary: Systemise GEO—don’t improvise it.
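Tracking AI referrals next to organic traffic usually starts with classifying each session’s referrer. A minimal sketch, assuming you can export raw referrer URLs from your analytics tool; the hostname lists below are illustrative assumptions rather than a complete or authoritative registry, and some assistants send no referrer at all, so those visits land in “direct”.

```python
from collections import Counter
from urllib.parse import urlparse

# Assumed AI assistant referrer hosts (illustrative only); check them against
# your own analytics data, since hostnames change and coverage is incomplete.
AI_REFERRER_HOSTS = {
    "chatgpt.com": "ChatGPT",
    "chat.openai.com": "ChatGPT",
    "perplexity.ai": "Perplexity",
    "gemini.google.com": "Gemini",
    "copilot.microsoft.com": "Copilot",
    "claude.ai": "Claude",
}
# Assumed organic search hosts (illustrative, e.g. country domains are not covered)
ORGANIC_HOSTS = {"google.com", "bing.com", "duckduckgo.com"}


def classify_referrer(referrer: str) -> str:
    """Map a raw referrer URL to an AI assistant name, 'organic', 'other', or 'direct'."""
    if not referrer:
        return "direct"
    host = (urlparse(referrer).hostname or "").lower().removeprefix("www.")
    if host in AI_REFERRER_HOSTS:
        return AI_REFERRER_HOSTS[host]
    if host in ORGANIC_HOSTS:
        return "organic"
    return "other"


def channel_counts(referrers: list[str]) -> Counter:
    """Count sessions per channel so AI referrals sit next to organic traffic."""
    return Counter(classify_referrer(r) for r in referrers)
```

Exact host matching is deliberate: it keeps `gemini.google.com` separate from `google.com` organic search.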

7. Recency & Ongoing Optimisation

Recency isn’t cosmetic; it shapes ranking and re-crawl behaviour. AI engines and Google both look for fresh signals and stable provenance (Search Essentials).

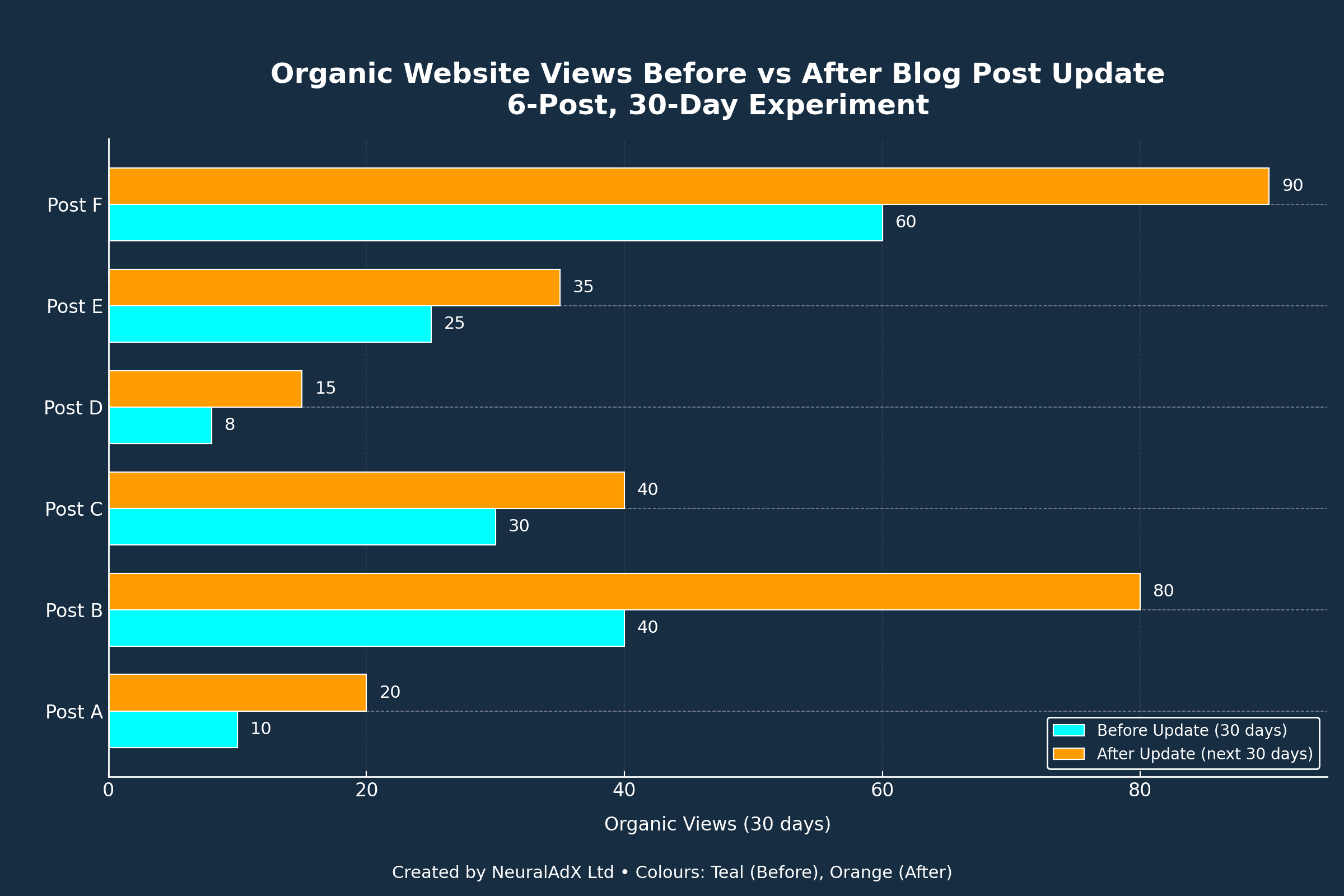

NeuralAdX Ltd visualisation of a HubSpot study showing that updating existing blog posts leads to higher organic traffic within 30 days. All six tested posts showed measurable growth in organic views after their updates (HubSpot).

Timeline: small, frequent improvements keep pages in the re-crawl path and maintain AI trust.

Updated monthly. Next scheduled update: November 2025.
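The “edit only changed facts” approach from the actionable steps pairs naturally with a visible change log. A minimal sketch using Python’s standard `difflib.SequenceMatcher`, assuming each fact sits on its own line of text; `change_log_entry` is a hypothetical helper, and the output wording is only one way to phrase the note.

```python
import difflib
from datetime import date


def change_log_entry(old_text: str, new_text: str, updated: date) -> str:
    """Build a visible change-log note listing only the lines that changed."""
    old_lines, new_lines = old_text.splitlines(), new_text.splitlines()
    # autojunk=False stops difflib from treating frequently repeated lines as junk on long pages
    matcher = difflib.SequenceMatcher(None, old_lines, new_lines, autojunk=False)
    removed, added = [], []
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag in ("replace", "delete"):
            removed.extend(old_lines[i1:i2])
        if tag in ("replace", "insert"):
            added.extend(new_lines[j1:j2])
    stamp = updated.strftime("%d %B %Y")
    if not removed and not added:
        return f"Reviewed on {stamp}: no facts changed."
    lines = [f"Updated on {stamp}:"]
    lines += [f"- Was: {line}" for line in removed]
    lines += [f"- Now: {line}" for line in added]
    return "\n".join(lines)
```

Listing only the changed lines keeps the note short and makes the delta between versions explicit to readers.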

Section summary: Make updates observable to both readers and crawlers.

8. Behavioural Engagement Signals

Engagement isn’t a direct ranking factor for all engines, but it correlates strongly with perceived quality and stickiness in AI product ecosystems.

9. Internal Knowledge Links

- See also: Generative Engine Optimisation Service

- See also: Proof that GEO Works

Section summary: Internal links help engines model your topical authority graph.

10. Summary & CTA

- Credibility persuades engines to trust your expertise.

- Verifiability gives models quotes and numbers they can safely cite.

- Recency keeps you in the conversation as facts change.

Book a GEO audit and implement a monthly, evidence-first content cadence that compounds AI visibility.

11. FAQ

What is GEO in one sentence?

Generative Engine Optimisation is the practice of structuring content so AI systems confidently quote it, using citations, statistics, quotations, schema, and visible recency (Aggarwal et al., 2024, arXiv).

How often should I update cornerstone pages?

Monthly is a reliable cadence. Publish a visible “Updated on” line and adjust data points that changed (Search Essentials).

Do keywords still matter?

Yes, for clarity and intent matching, but generative engines prioritise well-sourced, comprehensible explanations over density (Helpful content).

Which sources count as “authoritative”?

Peer-reviewed papers, credible industry data (e.g., platform usage studies), and public institutions (e.g., national statistics offices, regulators) (OECD/BCG/INSEAD 2025).

What’s the biggest mistake teams make?

Publishing long articles without inline citations, figures, or update signals—models struggle to ground unreferenced text (Aggarwal et al., 2024, ACM KDD).
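The FAQ above can also be exposed to machines as schema.org `FAQPage` markup, one of the schema types listed in the glossary. A minimal sketch, assuming the question and answer text is copied verbatim from the page; `faq_page_jsonld` is a hypothetical helper, and how much weight any engine gives this markup is not guaranteed.

```python
import json


def faq_page_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Build schema.org FAQPage JSON-LD from (question, answer) pairs."""
    return json.dumps(
        {
            "@context": "https://schema.org",
            "@type": "FAQPage",
            "mainEntity": [
                {
                    "@type": "Question",
                    "name": question,
                    "acceptedAnswer": {"@type": "Answer", "text": answer},
                }
                for question, answer in pairs
            ],
        },
        indent=2,
        ensure_ascii=False,
    )


if __name__ == "__main__":
    print(faq_page_jsonld([
        (
            "What is GEO in one sentence?",
            "Generative Engine Optimisation is the practice of structuring content so AI systems "
            "confidently quote it, using citations, statistics, quotations, schema, and visible recency.",
        ),
    ]))
```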

12. Glossary

| Term | Plain Definition | Technical Context |
|---|---|---|
| GEO | Optimising to be cited in AI answers | Defined in research by Aggarwal et al. (2024) |
| People-first content | Content created to help users, not game systems | Google guidance |
| Subjective impression | Model’s perceived authority/quality of text | Measured in GEO-bench tests |
| Grounding | Linking answers to external sources | Used by retrieval-augmented systems |
| Recency signal | Evidence that content is up-to-date | Visible timestamps + changed facts |
| Schema markup | Structured data for machines | BlogPosting, FAQPage, ImageObject, etc. |
| Multimodality | Using images, charts, video, and text together | Improves retrieval and comprehension |
| Visibility metric | How often content appears in AI answers | Defined/optimised in GEO research |
| Evidence table | A table summarising claims and sources | Machine-readable comparison nodes |
| Change log (content) | Visible note of what changed and when | Strengthens recency signals |

13. Mini Bibliography

| Source | Type | Year | Link |
|---|---|---|---|
| Aggarwal et al., GEO (ACM) | Academic | 2024 | ACM KDD |
| Aggarwal et al., GEO (preprint) | Academic | 2024 | arXiv |
| Google Search Essentials | Industry | 2025 | Google Search Central |
| Creating helpful content | Industry | 2025 | Google Search Central |
| OpenAI Retrieval Guide | Industry Docs | 2025 | OpenAI |
| Stanford AI Index | Academic/Institute | 2025 | Stanford HAI |
| UK Digital & Data Roadmap | Public Institution | 2022–2025 | GOV.UK |
| Blueprint for modern digital government | Public Institution | 2025 | UK Cabinet Office |
| OECD/BCG/INSEAD, AI in Firms | Public/Academic | 2025 | OECD |

Generative Engine Optimisation Specialists (GEO).

Contact: +44 203 355 7792 | Greater London, UK

Created by NeuralAdX Ltd © 2025

Approximate word count: 1,561 | Citations used: 15 (no duplicate URLs)